For decades, the polygraph has been the most recognised tool in lie detection. Measuring physiological responses such as heart rate, skin conductivity, and respiration, the polygraph has played a role in investigations, security screenings, and even workplace disputes. Yet, as technology advances, the limitations of traditional lie detection are becoming clearer. This has opened the door to new solutions — particularly the rise of AI-powered multimodal approaches that combine multiple streams of data to improve accuracy.

From Polygraphs to Multimodal Systems

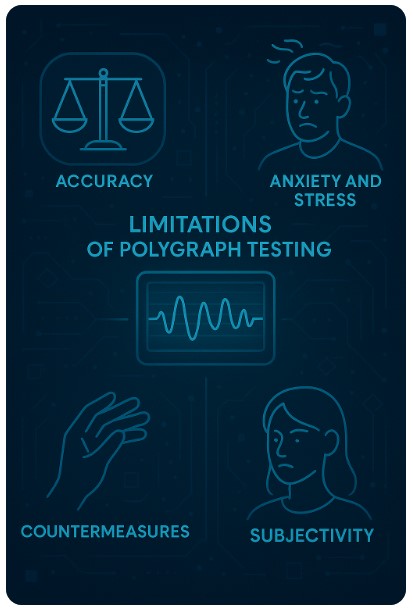

Polygraphs rely on a simple principle: deception creates stress, and stress produces physiological signals. But the challenge is that not all stress is deception. A nervous but truthful person may appear deceptive, while a skilled liar may mask their stress. This gap has encouraged researchers to explore more advanced methods.

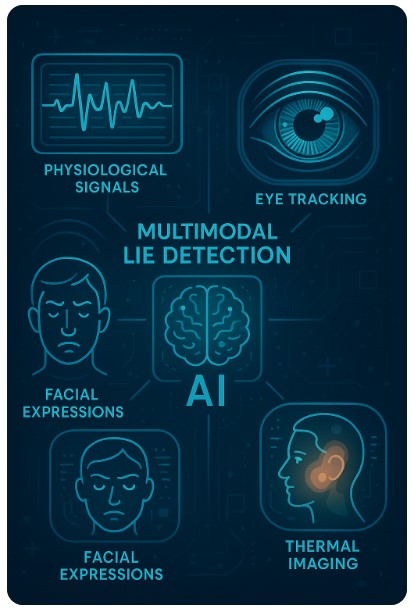

Enter multimodal systems. Instead of depending on one set of measurements, multimodal approaches bring together multiple indicators of truthfulness and deception. These may include:

- Physiological signals: Heart rate, blood pressure, galvanic skin response.

- Facial micro-expressions: Subtle involuntary muscle movements.

- Eye tracking and gaze behaviour: Blink rates, pupil dilation, fixation patterns.

- Voice and speech analysis: Changes in pitch, hesitation, or linguistic markers.

- Thermal imaging: Detecting heat changes in the face linked to stress.

By combining these signals, AI systems can analyse complex behavioural patterns that humans might miss. This offers the promise of greater accuracy and fewer false positives than polygraphs alone.
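Here is a minimal sketch, in Python, of one simple way such signals could be combined: weighted late fusion. It assumes each modality already has its own model that outputs a deception score between 0 and 1. The function name `fuse_scores`, the modality names and the weights are all hypothetical, chosen for illustration. They are not taken from any real product or from the research cited in this article, and many research systems instead learn the fusion step inside a neural network.

```python
from typing import Dict

# Illustrative weights only (hypothetical); a real system would learn or
# validate these on labelled data.
WEIGHTS: Dict[str, float] = {
    "physiological": 0.25,
    "micro_expressions": 0.20,
    "eye_tracking": 0.20,
    "voice": 0.20,
    "thermal": 0.15,
}


def fuse_scores(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted late fusion of per-modality deception scores in [0, 1].

    Modalities absent from `scores` (e.g. a sensor that was unavailable) are
    skipped, and the weights of the remaining modalities are renormalised so
    the fused score also stays in [0, 1] (assuming non-negative weights).
    """
    weighted_sum = 0.0
    total_weight = 0.0
    for modality, score in scores.items():
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"score for {modality!r} must be in [0, 1]")
        weight = weights.get(modality, 0.0)  # unknown modalities get no weight
        weighted_sum += weight * score
        total_weight += weight
    if total_weight == 0.0:
        raise ValueError("no weighted modalities available")
    return weighted_sum / total_weight


if __name__ == "__main__":
    # Hypothetical session with no thermal camera reading.
    session = {"physiological": 0.8, "micro_expressions": 0.4,
               "eye_tracking": 0.6, "voice": 0.5}
    print(f"fused score: {fuse_scores(session, WEIGHTS):.3f}")
```

Renormalising over the available weights lets the sketch cope with a missing sensor, such as the absent thermal reading above. In practice, the weights and any threshold for flagging a response would need to be validated on labelled data rather than set by hand.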

The Role of Artificial Intelligence

AI is the engine that makes multimodal lie detection possible. Traditional examiners are limited by how much they can process at once. AI systems, however, can rapidly analyse thousands of data points in real time.

Machine learning algorithms are trained on large datasets of both truthful and deceptive behaviour. Over time, these systems “learn” what deception looks like across different contexts. A good example is research into CogniModal-D, a dataset that combines physiological, facial, and audio cues to train multimodal deception detection systems [Nature].

The strength of AI lies in its ability to:

- Identify hidden patterns invisible to the naked eye.

- Integrate different data sources into one coherent judgment.

- Improve accuracy as more, and more representative, training data becomes available.

However, as with all AI systems, the results are only as good as the data. Datasets that are too narrow or biased can produce skewed results. For example, systems trained primarily on Western populations may be less accurate in diverse cultural contexts.
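One practical way to check for this kind of skew is to compare false positive rates across demographic groups. Of the people who were actually truthful, how many did the system flag as deceptive? The Python sketch below assumes a labelled evaluation set is available. The record format, the function name `false_positive_rates` and the group labels are hypothetical, and the sample records are synthetic, not real results.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# One evaluation record: (group label, actually deceptive?, flagged by system?)
Record = Tuple[str, bool, bool]


def false_positive_rates(records: Iterable[Record]) -> Dict[str, float]:
    """For each group, the share of truthful people the system flagged as deceptive.

    Groups with no truthful people in the data are not reported.
    """
    truthful = defaultdict(int)         # truthful people seen per group
    wrongly_flagged = defaultdict(int)  # truthful people flagged per group
    for group, deceptive, flagged in records:
        if not deceptive:
            truthful[group] += 1
            if flagged:
                wrongly_flagged[group] += 1
    return {group: wrongly_flagged[group] / count for group, count in truthful.items()}


if __name__ == "__main__":
    # Synthetic records for illustration only, not real results.
    records = (
        [("group_a", False, False)] * 9 + [("group_a", False, True)] * 1
        + [("group_b", False, False)] * 7 + [("group_b", False, True)] * 3
        + [("group_a", True, True)] * 5 + [("group_b", True, True)] * 5
    )
    for group, rate in sorted(false_positive_rates(records).items()):
        print(f"{group}: false positive rate {rate:.0%}")
```

A large gap between groups, like the one in this synthetic data (10% versus 30%), would be a signal to investigate the training data before relying on the system.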

Practical Applications

Multimodal lie detection is already moving beyond the laboratory and into practical use. Some of the key areas include:

- Law enforcement: Assisting in interrogations by flagging behavioural inconsistencies.

- Border security: Screening travellers with automated AI kiosks that combine facial recognition, voice analysis, and physiological sensors.

- Corporate investigations: Providing additional tools for workplace disputes or fraud inquiries.

- Insurance and finance: Supporting claims investigations where deception may cost millions.

In Canada, AI-powered lie detection kiosks are being trialled for immigration and security screening, using voice, eye tracking, and facial analysis to flag high-risk travellers [PR Newswire].

These applications show that multimodal lie detection isn’t a futuristic idea — it’s already here.

Challenges and Concerns

Despite the potential, multimodal AI approaches raise several important questions:

- Accuracy and reliability: While multimodal systems often outperform polygraphs in controlled tests, they are not infallible. High-stakes environments demand near-perfect accuracy, and false positives can have devastating consequences.

- Bias and fairness: AI systems risk inheriting the biases of their training data. If the dataset lacks diversity, certain demographic groups may be unfairly flagged as deceptive [Nature].

- Ethics and privacy: Many of these technologies (thermal imaging, eye tracking, facial recognition) collect sensitive biometric data. Without strict regulation, there is potential for misuse in surveillance or coercion.

- Legal admissibility: Courts remain cautious. Polygraph results are often inadmissible in UK and US courts, and AI-powered systems could face even more scrutiny [Issues.org]. Until standards are set, it is unlikely these tools will replace traditional investigative techniques in court.
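Some simple arithmetic shows why false positives matter so much in high-stakes screening. The Python sketch below assumes a hypothetical scenario: 100,000 people screened, 1 in 1,000 of them actually deceptive, and a system that is 90% sensitive and 90% specific. These figures are illustrative assumptions, not the measured performance of any real system, and `screening_outcomes` is a hypothetical helper.

```python
def screening_outcomes(population: int, prevalence: float,
                       sensitivity: float, specificity: float) -> dict:
    """Expected screening outcomes for a given base rate and accuracy."""
    deceptive = population * prevalence               # people actually lying
    truthful = population - deceptive                 # people telling the truth
    true_positives = deceptive * sensitivity          # liars correctly flagged
    false_positives = truthful * (1 - specificity)    # truthful people wrongly flagged
    flagged = true_positives + false_positives
    precision = true_positives / flagged if flagged else 0.0
    return {
        "true_positives": true_positives,
        "false_positives": false_positives,
        "precision": precision,  # chance that a flagged person is actually deceptive
    }


if __name__ == "__main__":
    # Hypothetical scenario: these numbers are assumptions for illustration only.
    outcome = screening_outcomes(population=100_000, prevalence=0.001,
                                 sensitivity=0.90, specificity=0.90)
    print(f"correctly flagged: {outcome['true_positives']:.0f}")
    print(f"wrongly flagged:   {outcome['false_positives']:.0f}")
    print(f"share of flags that are correct: {outcome['precision']:.1%}")
```

Under these assumptions, the system flags about 90 deceptive people alongside roughly 9,990 truthful ones, so fewer than 1 in 100 flags points to someone who is actually deceptive. When deception is rare, even a modest false positive rate produces far more wrongly flagged people than correctly flagged ones.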

The Road Ahead

The future of lie detection is not about replacing human examiners but augmenting them. Skilled professionals will continue to play a vital role in interpreting results, ensuring fairness, and maintaining ethical standards. AI systems can act as powerful assistants, processing vast amounts of data and highlighting potential red flags.

For organisations considering lie detection services, the takeaway is this: the field is evolving rapidly. Multimodal, AI-driven tools are likely to become the new standard in the coming years. But until accuracy, ethics, and legal frameworks are fully aligned, these systems should be viewed as supportive technologies rather than absolute arbiters of truth.

Final Thoughts

At Lies2Light, we stay at the forefront of these developments. Our commitment is not just to use the best tools available, but to ensure that they are applied ethically, responsibly, and with full transparency. The future of lie detection may be high-tech, but at its heart, it still depends on trust, integrity, and expert judgment.